Solving puzzles with computers.

Introduction

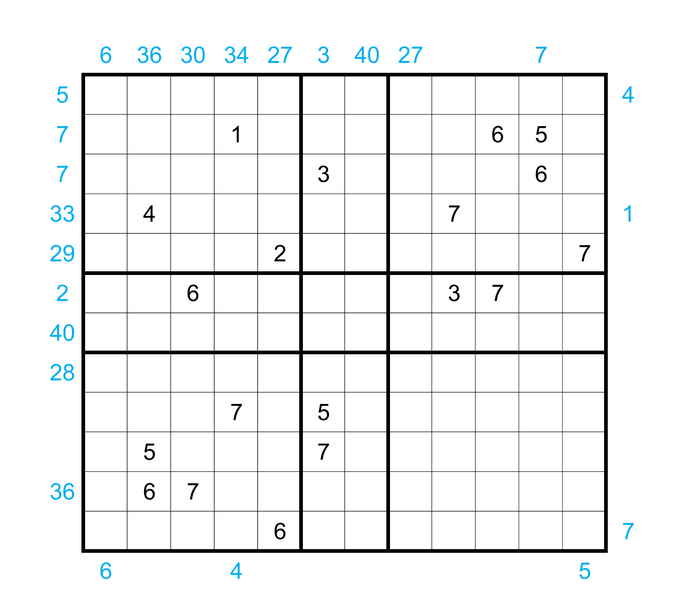

Earlier in the year I stumbled upon a puzzle posted by Jane Street called the 4-in-1 24-7 puzzle . The puzzle is in the front matter of this post. On a first look, and on first reading through the rules for the puzzle, it appears cartoonishly complicated. But, if you’d like to take your own crack at the problem, there is a simpler 24-7 puzzle which can be solved first (as I did), before tackling the 4-in-1 version.

I’m not sure if the creators of the puzzle imagined people would print the puzzle onto paper and solve it by hand, but I immediately thought to myself: I am way too lazy for that. So instead I naturally wanted to get a computer to do all the work for me…

Throughout my short academic career, I generally took much more interest in continuous optimization than I did in discrete optimization. Almost everything I learned about discrete optimization I learned ages ago from this Coursera course , and the application of semidefinite programming to approximating MAXCUT . That being said, I knew that constraint programming was a natural approach to solving puzzles, the 24-7 puzzles being no exception.

Interestingly, I’m no stranger to algorithmic puzzle-solving. Many years ago, my girlfriend showed me an optical illusion puzzle that involved fitting a 4x4 grid of tiles with matching patterns on adjacent edges – you had to figure out how to place the tiles so that the patterns on the edges all matched.

Figure 1: Ruining the fun of this puzzle with a computer

I wrote a simple C++ program to basically brute force this puzzle – I remember running it for about a day on my ancient laptop. Armed with the tools described in this blog post, it could probably be solved in under a second, and the modeling and implementation could be done in a couple hours (rather than the whole weekend, as the C++ program probably took me).

Constraint Programming

Constraint programming is an algorithmic methodology that targets problems that have a discrete solution space, such as arranging puzzle pieces, rather than continuous problems like optimizing the shape of an aluminum can. Moreover, in contrast to optimization, the focus is upon simply finding a feasible solution that satisfies a given set of constraints, rather than optimizing an objective function subject to those constraints.

The approach involves systematically enumerating constraints that a solution must meet and which, if met, constitute a solution. A search algorithm then explores the solution space and either successfully returns a solution, or proves that one does not exist.

An example of how constraint programming might be used is in solving Sudoku puzzles. For instance, a common technique involves initially considering all numbers from 1 to 9 as candidates for each square. You then iteratively narrow down these options based on existing numbers in the grid, filling in a square when only one candidate remains and backtracking when no candidates are left. The process of checking how a decision affects the constraints is called constraint propagation and it is one particular algorithmic building block for trying to tackle constraint programming problems.

I followed a strategy like this to hack together a Sudoku solver , similarly inspired when I saw my girlfriend working on a book of Sudoku puzzles… Perhaps this is a theme 🤔 … At the time I was rather satisfied to have been able to solve Sudokus in a couple hundred milliseconds (so slow largely because of a rather inefficient representation, and writing it in Python), though there are some rather fast programs that can solve Sudoku on the order of microseconds.

These examples are ad-hoc, but I am more interested in general approaches. Similarly to how CVX makes it incredibly easy to experiment with convex optimization problems, there are general modeling tools that can be used to model and solve whole classes of discrete optimization problems. Indeed, if the constraints can be appropriately described, general purpose algorithms can be applied to search for feasible solutions.

For the rest of this post, I will describe two such general tools: Integer Linear Programming, and Satisfiability Modulo Theories, and how they can be used to solve constraint programming problems.

Integer Linear Programming

A concrete and practical means of describing constraint programs is by means of integer linear programming (ILP). Specifically, a feasibility ILP:

\begin{equation} \begin{aligned} \text{find}&\ x\\ \text{such that} &\ Ax \le b\\ &\ x_i \in \mathbb{N}_0. \end{aligned}\notag \end{equation}

This looks a lot like an ordinary linear program \(\text{min}\{c^{\mathsf{T}} x\ |\ Ax \le b, x \in \mathbb{R}^n\}\) except there is no objective (practically speaking, just set \(c = 0\)) and there is an integer constraint \(x_i \in \mathbb{N}_0\). That is, each element of the solution vector \(x\) must be an integer (including zero): \(0, 1, 2, \ldots\)

While linear programming problems are convex optimization problems, and thus entirely tractable, ILPs are NP-complete. Theoretically, there is no known “fast” algorithm for solving ILPs in general, but practically speaking, we can usually still find optimal solutions (or good suboptimal approximations) fast enough for it to be useful.

Remark (Totally Unimodular Matrices): If the matrix \(A\) in the ILP constraints is “totally unimodular”, then the LP solution and the ILP solution coincide. This is an important and tractable special case .

Another special case of ILP is what one might call Boolean Linear Programming (BLP), but is usually actually referred to as \(0-1\) linear programming. In this case, we have the constraint \(x_i \in \{0, 1\}\) (equivalent to just including the constraint \(x_i \le 1\) in the ILP). To give a sense of how powerful ILP is, the reader is encouraged to think about why the following constraints encode logical operators as BLP constraints:

- logical-and: \(z = x \wedge y\) is encoded as \(z \ge x + y - 1, z \le x, z \le y\).

- logical-not: \(z = \neg x\) is encoded as \(z = 1 - x\)

Given that we can encode AND and NOT, we can encode any Boolean expression – these operators together are universal. Here’s a couple more examples:

- logical-enforcement (if x then y): \(x \implies y\) is encoded as \(x \le y\)

- logical-implication: \(z = (x \implies y) = y \vee \neg x\): is encoded as \(z \le (1 - x) + y, z \ge 1 - x, z \ge y\)

- This looks complicated, but is nothing but the combination of logical-or and logical-not.

The reader is encouraged to try to encode some other logical expressions, e.g., exclusive-or, logical-or, etc.

I’ll shortly use this modeling framework to encode and solve the (vanilla) 24-7 puzzle.

Satisfiability Modulo Theories

Similarly to ILP, Satisfiability modulo theories (SMT) provide a means of implementing constraint programming. SMT is a powerful methodology that generalizes Boolean Satisfiability (SAT) to allow the inclusion of arithmetic (among other things) in the constraints. For example, an instance of a SAT problem is to find a Boolean assignment to the variables \(x,y,z\) such that

\begin{equation} \begin{aligned} (x \vee \neg y \vee z) \wedge (y \vee \neg z) \end{aligned}\notag \end{equation}

evaluates to True, or prove that such an assignment does not exist.

An example of an SMT problem is to solve the (nonlinear!) system of inequalities

\begin{equation} \begin{aligned} x^2 + y^2 &> 3\\ x^3 + y &< 5 \end{aligned}\notag \end{equation}

over the integers. The inclusion of arithmetic makes SMT more general than SAT.

SAT and SMT both have rich mathematical theory surrounding them. However, I am primarily interested in SMT as a practical engineering tool, so I admit to knowing almost nothing about the theory. Indeed, I hardly know anything at all about theoretical computer science, and everything I’ve learned about SMT so far comes from this very practical book . Computationally, a library for doing SMT programming is the z3 theorem prover , which has convenient Python bindings . That same page has plenty of examples, as does this guide .

Here’s a quick example that solves the above nonlinear system:

|

|

|

|

I’ll shortly use z3 to solve the complicated 4-in-1 24-7 puzzle.

Solving the Vanilla 24-7 Puzzle

Now the fun begins. Here I’m going to apply integer linear programming to solve the “vanilla” 24-7 puzzle, which looks vaguely similar to a Sudoku:

Figure 2: Vanilla 24-7 Puzzle

The rules are described thus:

The grid is incomplete. Place numbers in some of the empty cells below so that in total the grid contains one 1, two 2’s, etc., up to seven 7’s. Furthermore, each row and column must contain exactly 4 numbers which sum to 20. Finally, the numbered cells must form a connected region, but every 2-by-2 subsquare in the completed grid must contain at least one empty cell.

Read this over a few times – the first couple of constraints sound straightforward, but the “connected region” constraint is difficult to even wrap one’s head around. It means that the cells which are filled in need to form a connected set – there are no “islands” of numbers. We’ll see these in detail as I show how they can be encoded as constraints in an ILP.

First, I am using ortools , so let’s do some setup:

|

|

This sets up a Constraint Programming model called model and a solver. We now just need to add our constraints!

Remark (performance): I generally first write code that is readable and easy to debug and understand, only trying to optimize when it is necessary. I’m quite directly describing the constraints of the problem, and not trying to do anything clever. I’ve introduced a number of variables which I believe add clarity, but which may, technically, be redundant. Fortunately, it is quite likely that the ILP solver is fairly capable of recognizing and eliminating obvious redundancies. That being said, the way in which a program is encoded (in any modeling language) can potentially have a significant impact on performance, so it can be worth it to revise and refactor if one is not getting the throughput they need.

Remark (modeling tip): While it may be counter intuitive, it is actually possible that increasing the number of constraints in the problem can actually improve performance. The reason being that the search space is more constrained.

The first variable I’m introducing is what I’ve called a panel. This is a \(7 \times 7 \times 7\) grid of \(\{0, 1\}\) indicators where panel[k][i][j] = 1 if and only if the cell at position (i, j) is equal to k. Let’s first encode all the static assignments using this variable.

|

|

The function model.Add adds a constraint to the ILP.

Notice that the constraint sum(panel[k][i][j] for k in K) <= 1, along with the fact that panel[k][i][j] is either \(0\) or \(1\), ensures that just one of these indicators can be active at a time.

Another obvious variable to introduce is the grid, which is a \(7 \times 7\) array of variables equal to the value of that cell.

|

|

Since only one panel indicator can be active, sum(k * panel[k][i][j] for k in K) must be equal to the integer value that is being encoded.

This grid variable makes it easier to encode the row and column sum constraints.

|

|

And, the reason I introduced the panel variable in the first place is because it makes is easy to encode the counting constraint:

|

|

The constraint that each row and column contain exactly four non-zero numbers, and that each \(2 \times 2\) subsquare contain an empty cell is easy to encode if we introduce another Boolean nz variable where nz[i][j] = 1 if and only if grid position \((i, j)\) is non-zero.

This variable feels redundant, as we should be able to just do things like if grid[i][j] > 0 right? Technically yes, but encoding if-else statements directly in an ILP is cumbersome and confusing – the nz variable makes reasoning easier. We’ll see later how z3 addresses this with higher level programming constructs.

|

|

Finally, the entries need to form a connected region. This is the most difficult constraint to encode. If you are confused about what constitutes a “connected region”, take a look at the solution – you can trace a path between any two filled in digits: there are no “islands”.

One idea is to try encode this is to do some kind of connected component labeling , but it seems difficult (to me?) to encode this in an ILP such that it is both necessary and sufficient. That is, it is fairly easy to construct a set of ILP constraints which will be satisfied if there is just one connected component, but going in the other direction (that there is necessarily a single connected component when the constraints are satisfied) seems much more difficult.

An alternative method is to imagine that the cells and their neighbours form a network of pipes, and then model some kind of flow within that network. We can let each non-zero part of the grid consume a single unit of flow, and then designate one of the given filled-in cells as a source with exactly enough units of flow to cover the whole grid. Since there is one 1, two 2s, etc. up to seven 7s, we know there must be exactly 28 non-zero cells.

It is well known that flow problems can be modeled with linear programs, so this approach seems promising for our ILP. The following code does exactly this – the comments should make the code fairly clear. However, it can be subtle.

|

|

This code looks fairly simple, but writing it was quite a challenge. It was particularly difficult to write down the right constraints that ensured only the one designated node could act as a source. In many earlier iterations I failed to properly enforce this and wound up with islands of non-zero cells. I also had a subtle bug wherein I wrote model.Add(outgoing_flow[i][j] == incoming_flow[i][j] - 1) instead of model.Add(outgoing_flow[i][j] == incoming_flow[i][j] - nz[i][j]).

Remark: When I originally solved the 24-7 puzzle (and the 4-in-1 puzzle below) I actually didn’t encode the path constraint condition. Instead, I just encoded a simpler sufficient condition and printed out all of the possible solutions (there were only 3) and selected the correct one manually – an expedient method.

In any case, we can finally print out a solution. I’ve hidden the implementation of the panel_to_str function as it is uninteresting. It just relies on getting the value of variables in the program with the method solver.Value, for example: solver.Value(panel[k][i][j]), etc.

|

|

|

|

And there we have it 😀

Solving the 4-in-1 Puzzle

The previous example with the 24-7 puzzle shows that ILP can be a rather cumbersome approach to encoding constraint programming problems, particularly if you endeavor to directly construct all of the linear inequalities needed to encode your program. In this section, I’m going to use the z3 theorem prover for SMT, which I think is considerably more expressive.

I’ll also be tackling a more difficult puzzle – the 4-in-1 24-7 puzzle. Indeed, the previous section was merely a warm up. The specific rules for the 4-in-1 puzzle is to:

Place numbers in some of the empty cells so that in total each of the four 7-by-7 outlined grids is a legal “Twenty Four Seven” grid. Namely: each 7-by-7 grid’s interior should contain one 1, two 2’s, etc., up to seven 7’s. Furthermore, each row and column within the 7-by-7’s must contain exactly 4 numbers which sum to 20. Finally, the numbered cells must form a connected region, but every 2-by-2 subsquare in the completed grid must contain at least one empty cell.

Some numbers have been placed inside the grid. Additionally, some blue numbers have been placed outside of the grids. A number outside the grid represents either the sum of the row or column it is facing, or the value of the first number it sees in that row or column.

Figure 3: The 4-in-1 24-7 Puzzle

Let’s begin!

|

|

As before, I’m keeping track of the grid values, with a separate convenience array for the subgrids. As opposed to ILP, z3 is expressive enough that I don’t have to directly introduce multiple separate variables for different conditions like nz, panel, etc.

Constraints are set similarly, and can also be tagged. The constraints list will contain tuples of the form (tag, constraint), as the tag can be useful for debugging. You can ask z3 to print out the tags of some set of unsatisfiable constraints (whenever it finds one), so you can track down bugs more easily. Ironically, I didn’t actually make proper use of this feature, since I noticed the bug I was searching for during the process of meticulously tagging each constraint.

|

|

I didn’t set bounds for the variables when I constructed them, so lets do that here. Notice that z3 directly supports convenient constructs like z3.And. There is also z3.Or, z3.Implies, z3.If, and many others – pay attention for these throughout. These functions make it much easier to encode the constraints than having to reason through encoding them with ILP.

|

|

The following lengthy code block implements again the 24-7 puzzle logic, but this time in z3. Since I’ve already explained it, I don’t do so again, except for the comments in the code itself. Consider comparing this code with the ILP implementation.

|

|

This function is now being used as a subroutine – each subgrid needs to be a valid 24-7 puzzle. I specify the flow sources for each subgrid manually and arbitrarily from the fixed given data.

|

|

Now, there are some additional rules described for the 4-in-1 puzzle. Specifically, recall:

Some numbers have been placed inside the grid. Additionally, some blue numbers have been placed outside the grid. A number outside the grid represents either the sum of the row or column it is facing, or the value of the first number it sees in that row or column.

This constraint is a bit confusing, but ultimately we can just encode it with a sequence of nested if/else statements. I’ve constructed this with a recursive function as follows, but one could just as easily write down the constraints “manually” with a keyboard macro .

|

|

We now need to manually write down all the constraints from the figure. I enumerate them in counter-clockwise order, starting from the top-left corner.

|

|

Finally, we instantiate and call the solver.

|

|

|

|

Which is a correct solution! We can also check that the solution is unique by adding the negation of the solution back as another constraint and then re-solving.

|

|

|

|

This proves that the solution I found is unique, and also serves to illustrate the “unsat subset” functionality.

Remark: You can find my name on the solution page of this puzzle, so you know I’m no fraud. But similarly as the vanilla 24-7 puzzle, I didn’t fully encode the entire problem as I’ve done now. Instead, since I only cared about getting to a final result, I manually narrowed down some of the constraints with my own direct reasoning, or through sufficient conditions, and then printed out all the candidate solutions (there were 8) with ILP. I finally found the only correct one ad-hoc, and computed the product of the areas of white space by hand.

Conclusion

We’ve seen how both Integer Linear Programming (ILP) and Satisfiability Modulo Theories (SMT), a generalization of SAT, can be used to solve constraint programming problems. These tools are incredibly powerful, and it seems likely that most practical “day to day” discrete optimization problems I might care to solve can be easily implemented and solved with ortools or z3. I find z3 to be considerably more expressive than ILP, and it is probably a good go-to tool for constraint programming. That being said, there are plenty of mathematical optimization problems which can be encoded as ILPs, so if optimization is the goal, rather than simple constraint satisfaction, ILP might be the right choice.